The 2010 GPU High Performance Computing Summit opened in Beijing on the morning of December 21. NVIDIA founder and CEO Huang Renxun attended in person and delivered the keynote speech. This is the first time NVIDIA has hosted its GPU High Performance Computing forum outside the United States.

Huang Renxun said at the meeting that, beyond its traditional research and military uses, high-performance computing (HPC) is now used in fields as varied as biomedicine, geological exploration, automotive design, medical imaging, finance, product design, and weather forecasting.

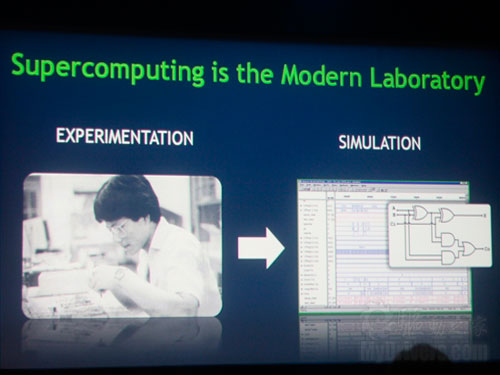

Take a chip design company like NVIDIA as an example. Thirty years ago, designing microelectronic products required assembling large numbers of physical components for testing. Today, all of NVIDIA's GPU designs are done through simulation on high-performance computers.

As you may have guessed, the young man designing a circuit in the lab is Huang Renxun himself, photographed in 1982 at the age of 19 as a student at Oregon State University.

NVIDIA spends more than $100 million a year upgrading and maintaining its own supercomputer hardware. The system currently has more than 40,000 CPU cores, and most of its computing capacity goes to Verilog simulation of circuit designs.

Given this diversity of applications, NVIDIA believes high-performance computing will branch out from traditional x86-based servers in three directions, depending on application requirements: enterprise servers will remain dominated by the x86 architecture; web servers, which demand large volumes of simple computation, will adopt energy-efficient ARM products; and supercomputers focused on massively parallel computing will move toward the GPU.

This shift is visible in the growth of global supercomputing capacity. Over the past 10 years or so, the aggregate performance of the TOP500 list of the world's fastest supercomputers has grown roughly in step with Moore's Law. Around 2007, TOP500 performance began to rise markedly faster, and that is exactly when GPUs were introduced into supercomputers.

In the past, supercomputers were found almost exclusively in government-funded supercomputing centers and research institutes. Now that GPU acceleration has brought down the cost of supercomputing, more companies can afford HPC, and they are genuinely putting it to work in industrial applications.

Huang Renxun gave a particularly interesting example here. Procter & Gamble, whose products cover almost every corner of daily life, applies high-performance computing to the design and development of many of its products. For example, it used HPC to design the curvature of its Pringles potato chips so that they would not "fly" off the high-speed production line. The designs of other products, such as coffee cups and shampoo, also rely heavily on supercomputers.

In this supercomputing revolution, the best approach scientists have found so far is to combine CPUs with GPUs: the CPU handles the sequential operations, while the CUDA GPU, which integrates a large number of cores, handles the parallel computation.
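As a minimal sketch of that division of labor, assuming a CUDA-capable GPU and the CUDA toolkit (`nvcc`), the example below uses SAXPY (`out = a*x + y`), a standard data-parallel operation chosen purely for illustration. It is not taken from the talk, and the names `saxpy_heterogeneous`, `saxpy_kernel`, and `DeviceBuffer` are hypothetical. The host function runs the sequential steps on the CPU, while the kernel spreads the element-wise arithmetic across GPU threads.

```cuda
// Hypothetical illustration (not from the talk): CPU orchestrates, GPU computes in parallel.
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

#include <cuda_runtime.h>

// Turn any CUDA runtime error into a C++ exception so failures are visible.
static void check(cudaError_t err, const char* what) {
    if (err != cudaSuccess) {
        throw std::runtime_error(std::string(what) + ": " + cudaGetErrorString(err));
    }
}

// Owns one device allocation and frees it automatically (RAII).
struct DeviceBuffer {
    float* ptr = nullptr;
    explicit DeviceBuffer(std::size_t count) {
        check(cudaMalloc(&ptr, count * sizeof(float)), "cudaMalloc");
    }
    ~DeviceBuffer() { cudaFree(ptr); }
    DeviceBuffer(const DeviceBuffer&) = delete;
    DeviceBuffer& operator=(const DeviceBuffer&) = delete;
};

// GPU part: many threads, each computing one output element independently.
__global__ void saxpy_kernel(int n, float a, const float* x, const float* y, float* out) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        out[i] = a * x[i] + y[i];
    }
}

// CPU part: the sequential control flow (allocate, copy in, launch, copy out).
std::vector<float> saxpy_heterogeneous(float a,
                                       const std::vector<float>& x,
                                       const std::vector<float>& y) {
    assert(x.size() == y.size());
    std::vector<float> out(x.size());
    if (x.empty()) {
        return out;  // nothing to offload to the GPU
    }
    const int n = static_cast<int>(x.size());
    const std::size_t bytes = x.size() * sizeof(float);

    DeviceBuffer d_x(x.size()), d_y(x.size()), d_out(x.size());
    check(cudaMemcpy(d_x.ptr, x.data(), bytes, cudaMemcpyHostToDevice), "copy x");
    check(cudaMemcpy(d_y.ptr, y.data(), bytes, cudaMemcpyHostToDevice), "copy y");

    const int threads = 256;                           // threads per block
    const int blocks = (n + threads - 1) / threads;    // enough blocks to cover n
    saxpy_kernel<<<blocks, threads>>>(n, a, d_x.ptr, d_y.ptr, d_out.ptr);
    check(cudaGetLastError(), "kernel launch");

    // cudaMemcpy on the default stream waits for the kernel to finish first.
    check(cudaMemcpy(out.data(), d_out.ptr, bytes, cudaMemcpyDeviceToHost), "copy out");
    return out;
}
```

For example, `saxpy_heterogeneous(2.0f, {1, 2, 3}, {10, 20, 30})` returns `{12, 24, 36}`. In a real application the data would usually stay resident on the GPU across many kernel launches, since for a computation this small the host-device copies cost far more than the arithmetic.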

A typical example of this "heterogeneous computing" approach is China's Tianhe-1A, which combines more than 14,000 CPUs with 7,168 Tesla M2050 GPUs for a total computing power of 2.5 PFLOPS, making it the world's fastest supercomputer today. Yang Chanqun, chief designer of Tianhe-1 and director of the Department of Computer Science and Technology at the People's Liberation Army's National University of Defense Technology, took part in designing the system. Reportedly, between last year's experimental system and this year's Tianhe-1A, the team raised the efficiency of GPU acceleration from 20% to 70%, and its self-developed node interconnect network doubles the scale and performance of the imported network system used last year.

In the current TOP500 global supercomputer rankings, first-ranked Tianhe-1A, third-ranked Nebulae, and fourth-ranked Tsubame from Japan all use Tesla GPUs.

When the power consumption of these supercomputers is taken into account, the advantage of GPU-accelerated systems is even clearer.

In the Green500 ranking of energy-efficient supercomputers, the top ten consist of Tesla-accelerated systems plus three IBM Blue Gene systems. By contrast, Jaguar, which ranks second on the TOP500, ranks only 81st in energy efficiency.

Compared with specialized supercomputer systems such as Blue Gene, CUDA GPU computing has another clear advantage: CUDA-capable systems are available everywhere for programming and development. In the supercomputer market, the share of vendors offering CUDA GPU-accelerated commercial products has gone from almost none in 2009 to nearly all of them this year.

Finally, it is natural to look ahead. Following the roadmap announced at GTC at the end of September, NVIDIA will release Kepler next year and Maxwell in 2013; Maxwell is expected to deliver 16 times the performance of the 2007 Tesla architecture at the same or even lower power consumption.

Further out is the Echelon project, targeted for 2018 and aimed at exascale computers, which is to deliver 100 times the performance of the Fermi architecture at the same power consumption.